Paxos-based sharded ordered key value store with CAS

To build a key value storage each node runs a new instance of Paxos variable each time when the node receives a get or put request for an unseen key and redirects all further requests related to the key to that variable. Of course different instances on the same node should share the quorum size and the set of nodes and use unified membership change mechanism which generalises the Paxos variable’s version to work with a dynamic set of Paxos variables. For example its 2n+1 to 2n+2 transition is:

- Increase the quorum size.

- Generate an event on each node.

- Fetch all keys from the nodes up to the event, union them and sync all of them. Of course this operation may be optimized by batching and by parallel processing.

- Add a new node to the set of nodes.

Up to this point we designed a replicated key/value with strong consistency and per key test-and-set concurrency control primitive. Since the whole dataset fits one node nothing prevents us from maintaining the order on keys and support range queries.

The next step is to support sharding.

Sharding

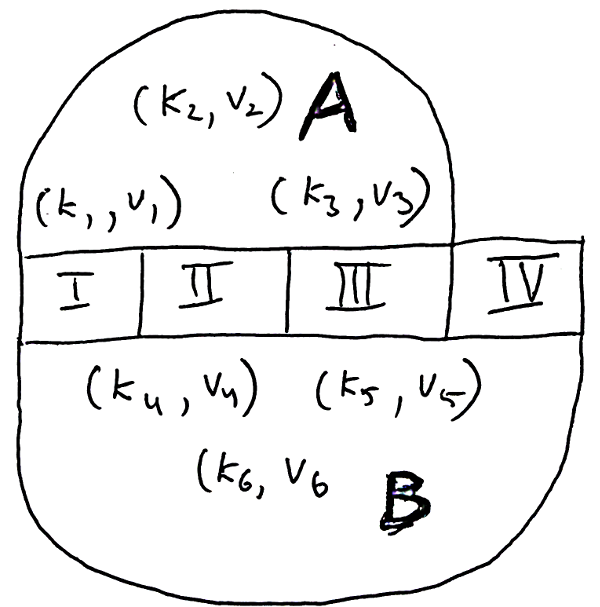

Imagine a key value storage that lives on three nodes.

Some day because of the storage usage or high load we will decide to split the storage. So we peek a key from the key space and split the key/value storage into two logical groups. First group (A) contains key less-or-equal to the key and the second group contains the rest (B).

Then we add a node to the B group.

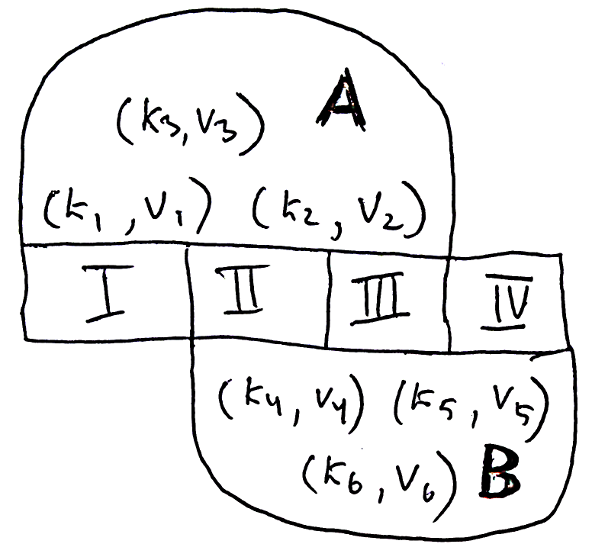

And remove a node from it.

And repeat the process until we get A and B clusters working on the different sets of nodes.

As a result we split the storage without stopping the cluster or loosing consistency.